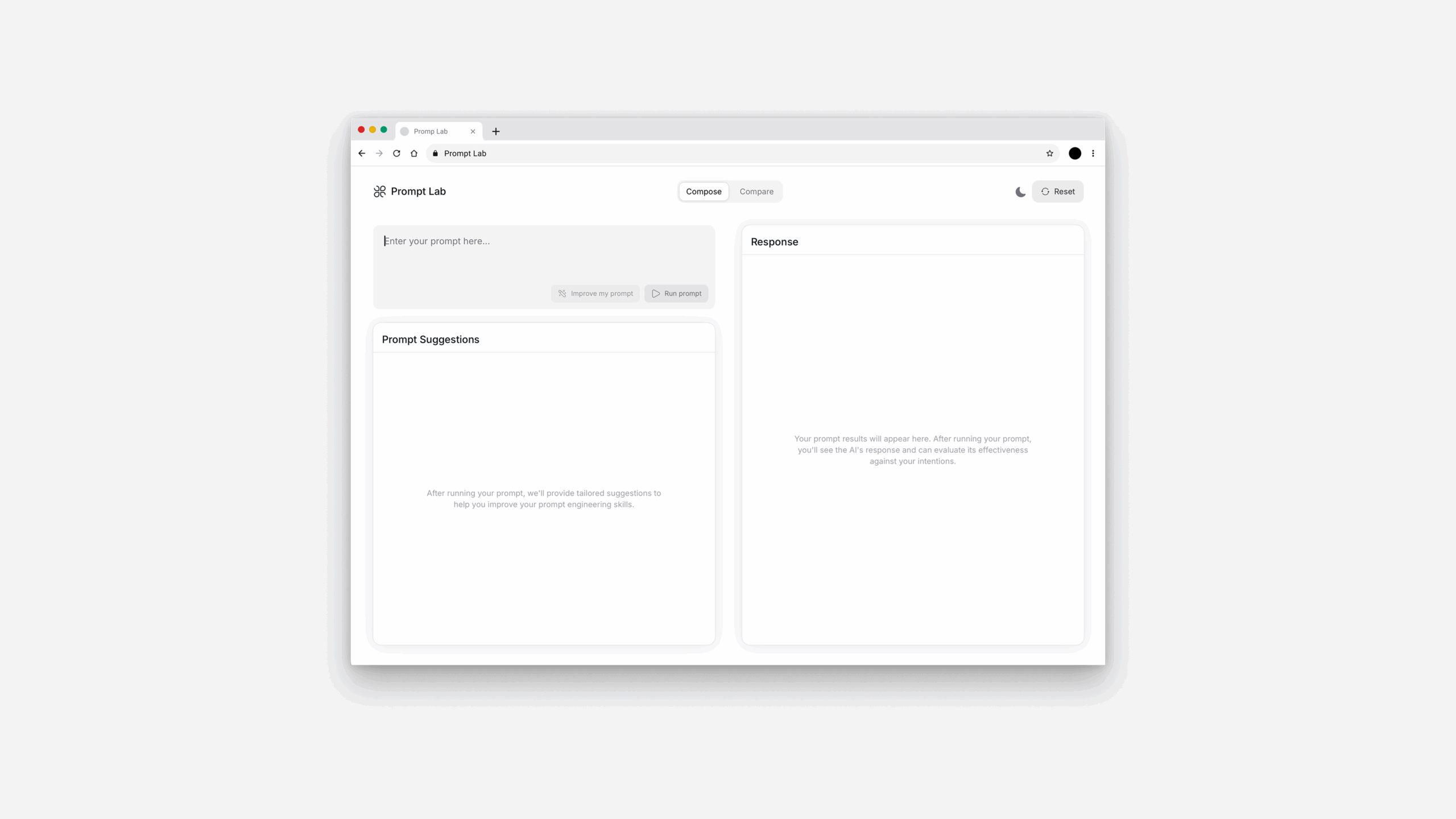

Prompt Lab is an interactive platform that helps non-technical users build effective prompt engineering skills through structured comparison, bridging the literacy gap in human-AI communication.

Role

Researcher & Designer

Affiliation

New York University

Timeline

Jan 2025 - May 2025

Platform

Web

Team

Solo thesis project with guidance from academic advisor Alex Nathanson

Project

Prompt Lab is an interactive playground designed to help users develop effective communication skills with AI systems by visualizing, comparing, and iteratively improving prompts.

This project was completed as part of my Master of Science in Integrated Design & Media at NYU Tandon School of Engineering.

Challenge

The rapid integration of large language models (LLMs) into professional workflows has created an urgent need to address the prompt literacy gap among non-technical users.

Users struggle to articulate clear instructions, provide sufficient context, and iteratively refine prompts

Poor results provide no clear pathway for improvement, creating a negative feedback loop

Inadequate prompt literacy diminishes user agency and control over AI interactions

Current tools focus on templates rather than building transferable communication skills

My role & research

Defining the prompt literacy problem

I reviewed eight studies on LLM interface design to identify critical gaps in current approaches to AI literacy development.

Designing the solution

I led the end-to-end design and development process, including requirements gathering and user research, system architecture design, interactive prototyping, and user evaluation with professionals.

Evaluating with participants

I designed a mixed-methods study and ran sessions with 10 professionals from diverse backgrounds to evaluate the platform's effectiveness.

Research

Throughout the project, I employed various research methodologies: literature analysis of LLM interface design studies, interviews with professionals who regularly use AI tools, think-aloud protocols during task completion, post-task reflection discussions, systematic coding of qualitative data, and quantitative analysis of interaction patterns.

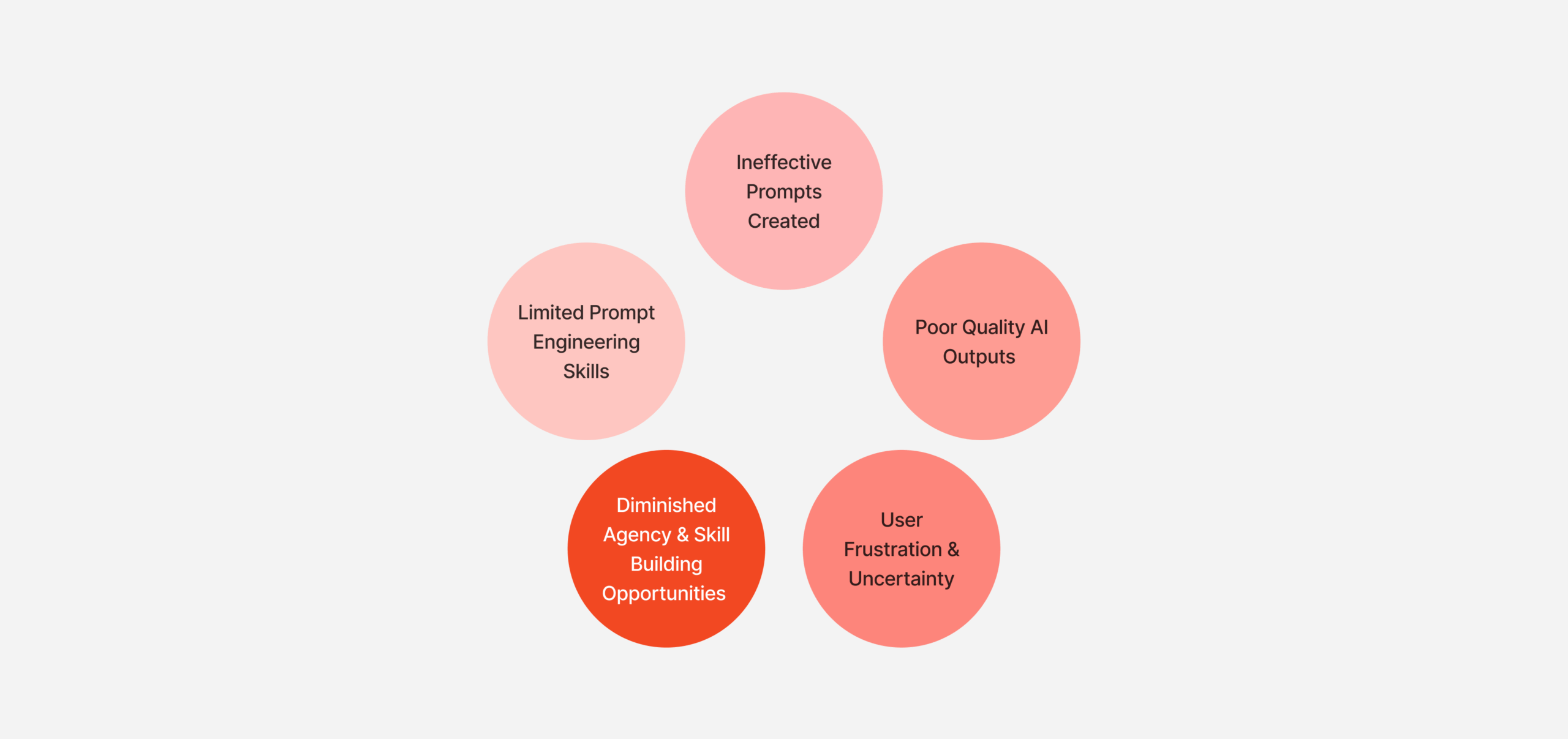

User challenges

Why do non-technical users struggle to communicate effectively with AI?

Skill development challenges

Current approaches provide disconnected features rather than cohesive learning experiences, leading to fragmented skill development.

Guidance vs. exploration imbalance

Highly structured approaches improve success rates but limit creative exploration and learning.

Context-specific evaluation

Users lack frameworks to evaluate communication effectiveness across different AI tasks.

Limited transferability

Users develop platform-specific techniques rather than generalizable communication strategies.

From participants

"Seeing how small changes in structure completely changed the output was more helpful than reading a dozen articles about prompt engineering." — Study Participant

"I'd rather see examples of what works than read abstract guidelines. This gives me a mental checklist I can apply to any prompt I write." — Marketing Professional

"I never realized how much my communication choices affect what the AI gives back. It's made me more thoughtful about how I frame questions." — Administrative Professional

How might we?

Learning through comparison

HMW create interfaces that encourage iterative refinement through systematic comparison?

Balanced guidance

HMW provide context-sensitive, adaptive guidance while preserving creative exploration?

Transferable frameworks

HMW design evaluation frameworks that adapt to different communication contexts?

Skill generalization

HMW emphasize transferable principles rather than platform-specific techniques?

Research findings

Through literature analysis, I identified three critical components for AI literacy development:

Visualization of cause-effect relationships in AI interactions

Interactive learning elements balancing structure with exploration

Comparative evaluation frameworks adaptable to different contexts

Project goals

My project aimed to accelerate prompt engineering learning through visual comparison, develop transferable evaluation frameworks, and foster professional responsibility in AI communication. Success was measured through participants' adoption of structured patterns, transfer of evaluation criteria to workplace scenarios, and increased awareness of communication impact.

Designing

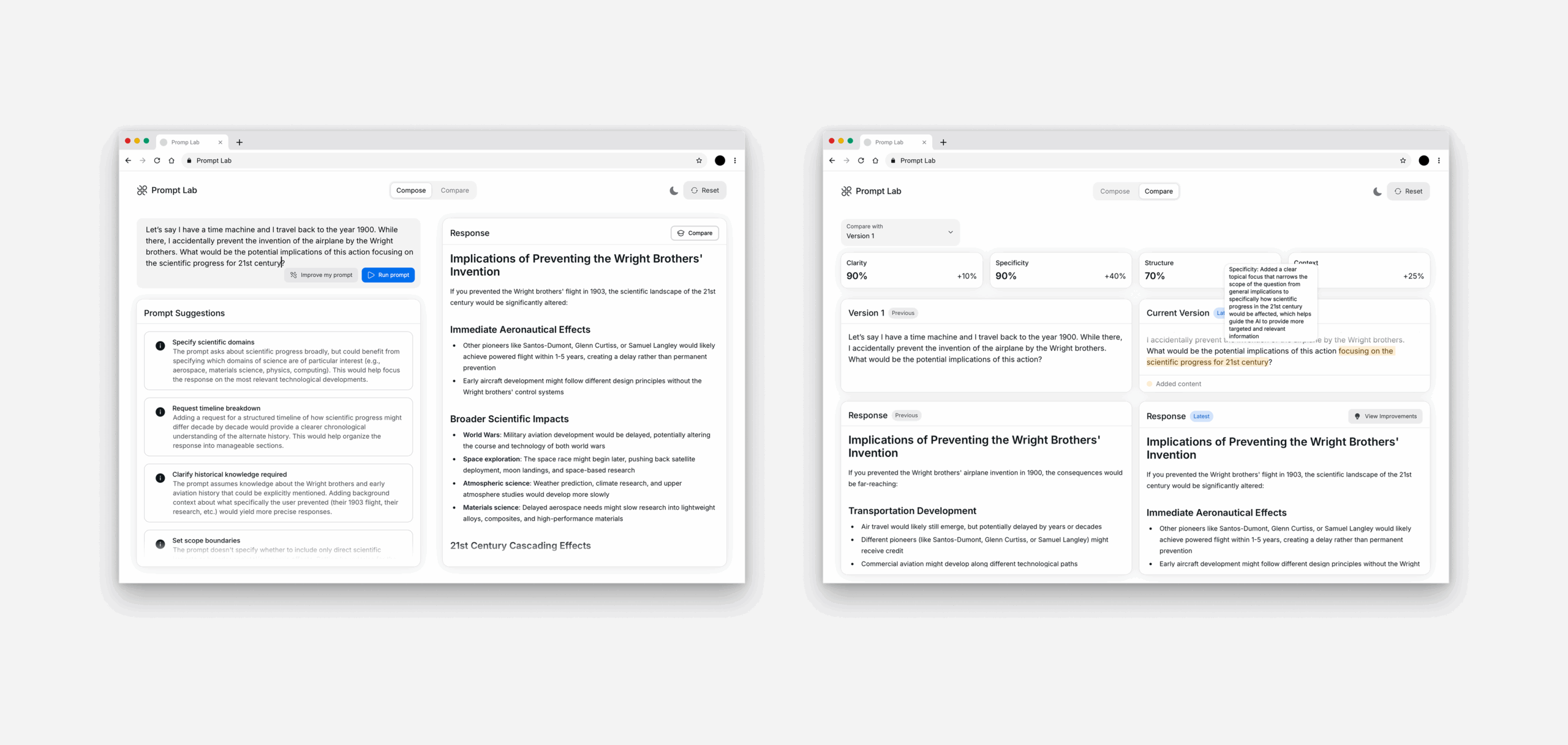

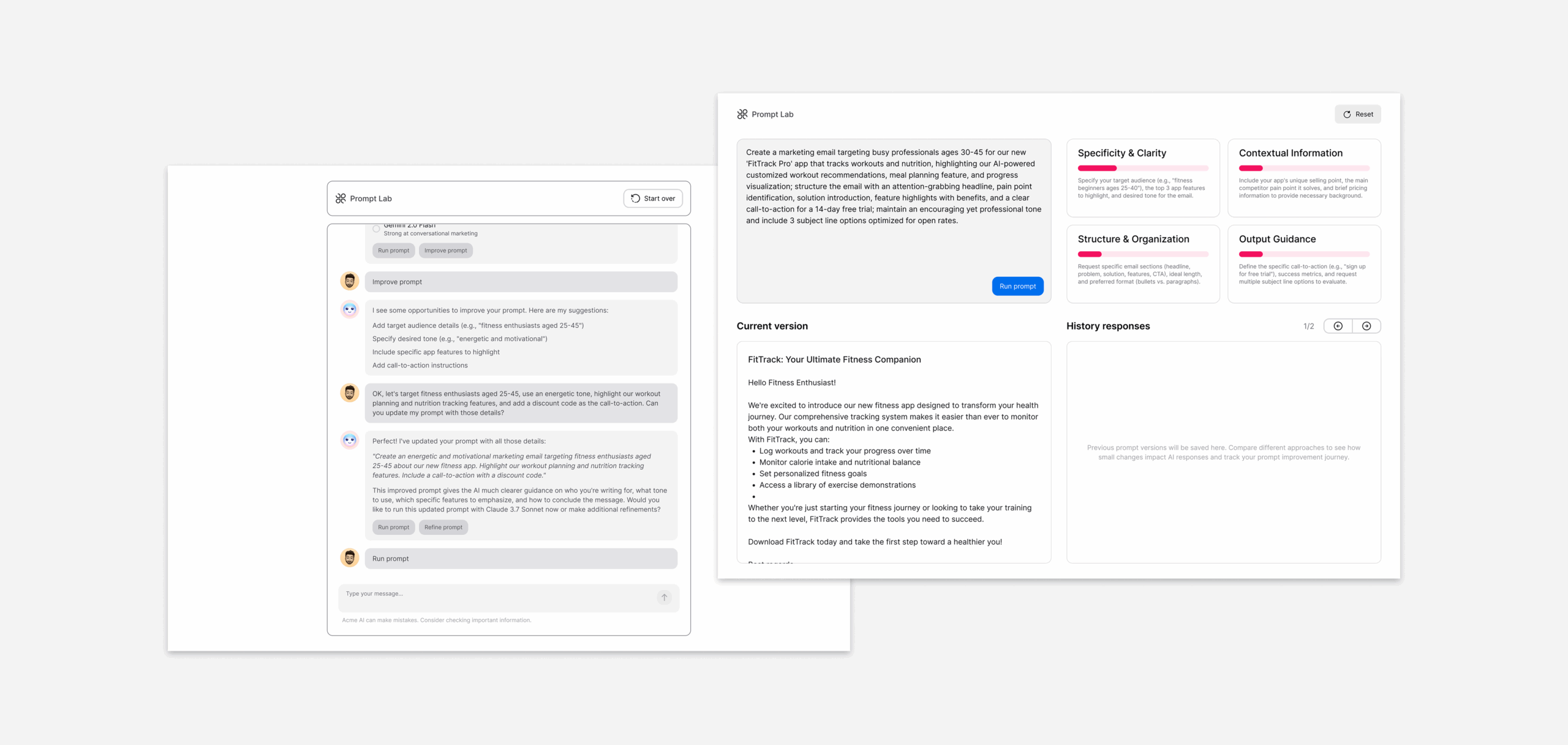

Compose Mode

A focused creation environment with real-time feedback, automatic task detection with context-aware suggestions, and improvement recommendations that explain their expected impact.
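The case study does not describe how task detection works. As a minimal sketch, assuming a simple keyword heuristic (a swapped-in technique; a production version might instead ask the language model to classify the prompt), detection and a context-aware tip could look like this. The task categories, keyword lists, and tip texts are all hypothetical.

```typescript
// Hypothetical task categories; not taken from Prompt Lab itself.
type TaskType = "writing" | "analysis" | "coding" | "summarization" | "general";

// Illustrative keyword lists (assumption) used to guess the task type.
const TASK_KEYWORDS: Record<Exclude<TaskType, "general">, string[]> = {
  writing: ["write", "draft", "email", "blog", "essay"],
  analysis: ["analyze", "compare", "evaluate", "pros and cons"],
  coding: ["code", "function", "bug", "script", "sql"],
  summarization: ["summarize", "summary", "tl;dr", "key points"],
};

// Hypothetical context-aware tips, one per task type.
const TASK_TIPS: Record<TaskType, string> = {
  writing: "State the audience and the tone you want.",
  analysis: "List the criteria the analysis should use.",
  coding: "Name the language and include the relevant code or error.",
  summarization: "Specify the target length and what to emphasize.",
  general: "Explain why you need this and what a good answer looks like.",
};

// Count keyword hits per task type; the type with the most hits wins,
// and a prompt with no hits falls back to "general".
function detectTask(prompt: string): TaskType {
  const text = prompt.toLowerCase();
  let best: TaskType = "general";
  let bestScore = 0;
  for (const [task, keywords] of Object.entries(TASK_KEYWORDS) as [TaskType, string[]][]) {
    const score = keywords.filter((kw) => text.includes(kw)).length;
    if (score > bestScore) {
      best = task;
      bestScore = score;
    }
  }
  return best;
}

// Return the tip that matches the detected task.
function suggestionFor(prompt: string): string {
  return TASK_TIPS[detectTask(prompt)];
}
```

Substring matching is deliberately crude here; it only illustrates how a detected task can drive which suggestion the interface surfaces.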

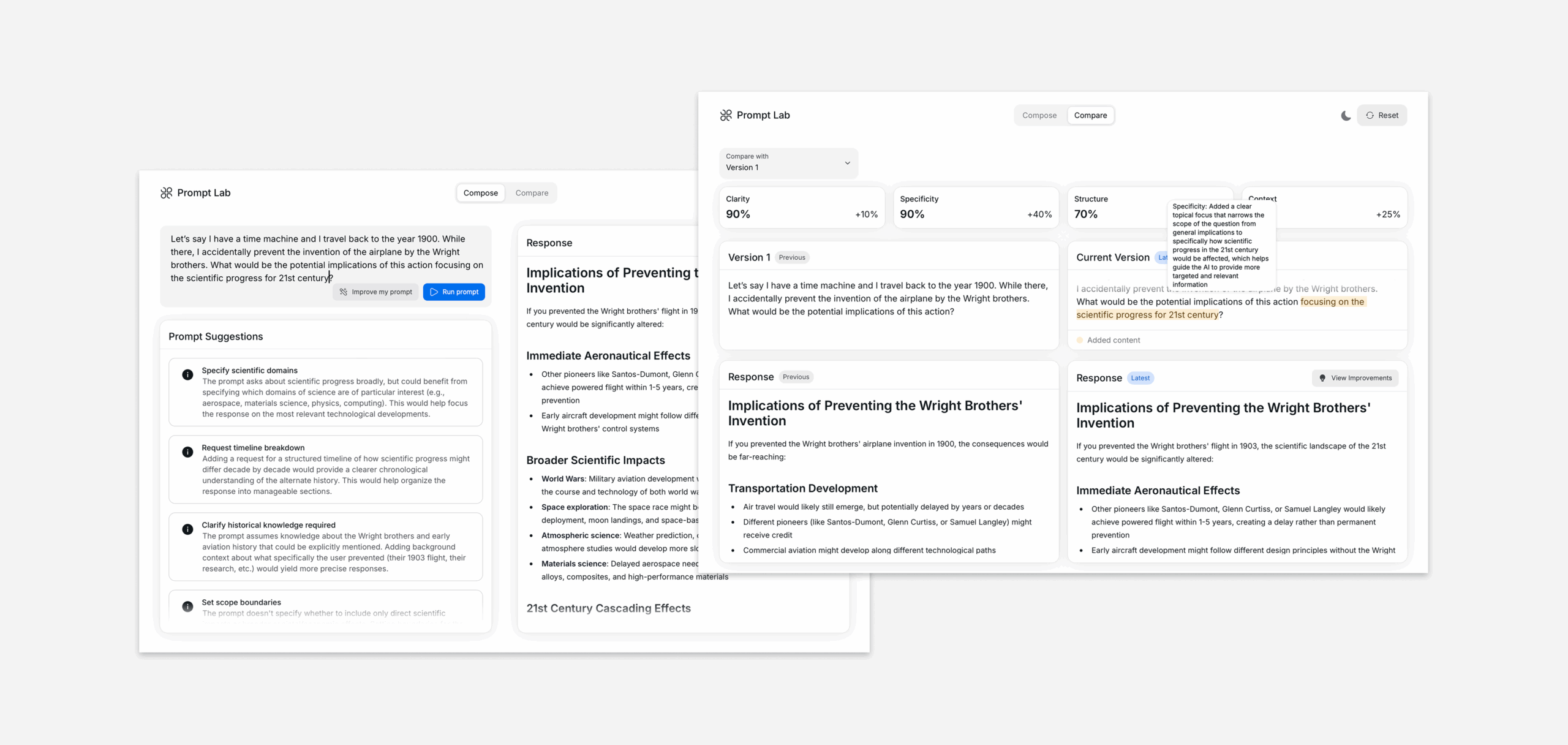

Compare Mode

Side-by-side visualization of prompt versions, differential highlighting to emphasize key changes, and educational annotations explaining improvement principles.
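Differential highlighting needs some way to decide which words changed between two prompt versions. The sketch below assumes a word-level diff based on the longest common subsequence (LCS), one common approach; Prompt Lab's actual method is not documented here, and the `diffWords` and `DiffOp` names are hypothetical.

```typescript
// One entry per word: unchanged, added in the new version, or removed from the old one.
type DiffOp = { kind: "same" | "added" | "removed"; word: string };

// Word-level diff between two prompt versions using an LCS table.
function diffWords(before: string, after: string): DiffOp[] {
  const a = before.split(/\s+/).filter(Boolean);
  const b = after.split(/\s+/).filter(Boolean);

  // lcs[i][j] holds the LCS length of a[i..] and b[j..].
  const lcs: number[][] = Array.from({ length: a.length + 1 }, () =>
    new Array<number>(b.length + 1).fill(0),
  );
  for (let i = a.length - 1; i >= 0; i--) {
    for (let j = b.length - 1; j >= 0; j--) {
      lcs[i][j] = a[i] === b[j] ? lcs[i + 1][j + 1] + 1 : Math.max(lcs[i + 1][j], lcs[i][j + 1]);
    }
  }

  // Walk the table from the start, preferring the branch that keeps the longer LCS.
  const ops: DiffOp[] = [];
  let i = 0;
  let j = 0;
  while (i < a.length && j < b.length) {
    if (a[i] === b[j]) {
      ops.push({ kind: "same", word: a[i] });
      i++;
      j++;
    } else if (lcs[i + 1][j] >= lcs[i][j + 1]) {
      ops.push({ kind: "removed", word: a[i] });
      i++;
    } else {
      ops.push({ kind: "added", word: b[j] });
      j++;
    }
  }
  // Any leftover words exist only in one version.
  while (i < a.length) ops.push({ kind: "removed", word: a[i++] });
  while (j < b.length) ops.push({ kind: "added", word: b[j++] });
  return ops;
}
```

A React view could then map `added` entries to one highlight style and `removed` entries to another, leaving `same` words unstyled so the key changes stand out.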

I implemented complementary educational components: a contextual guidance system with task-specific best practices, a version management system that tracks qualitative improvements, and analytics that visualize each prompt's improvement history.


Implementation

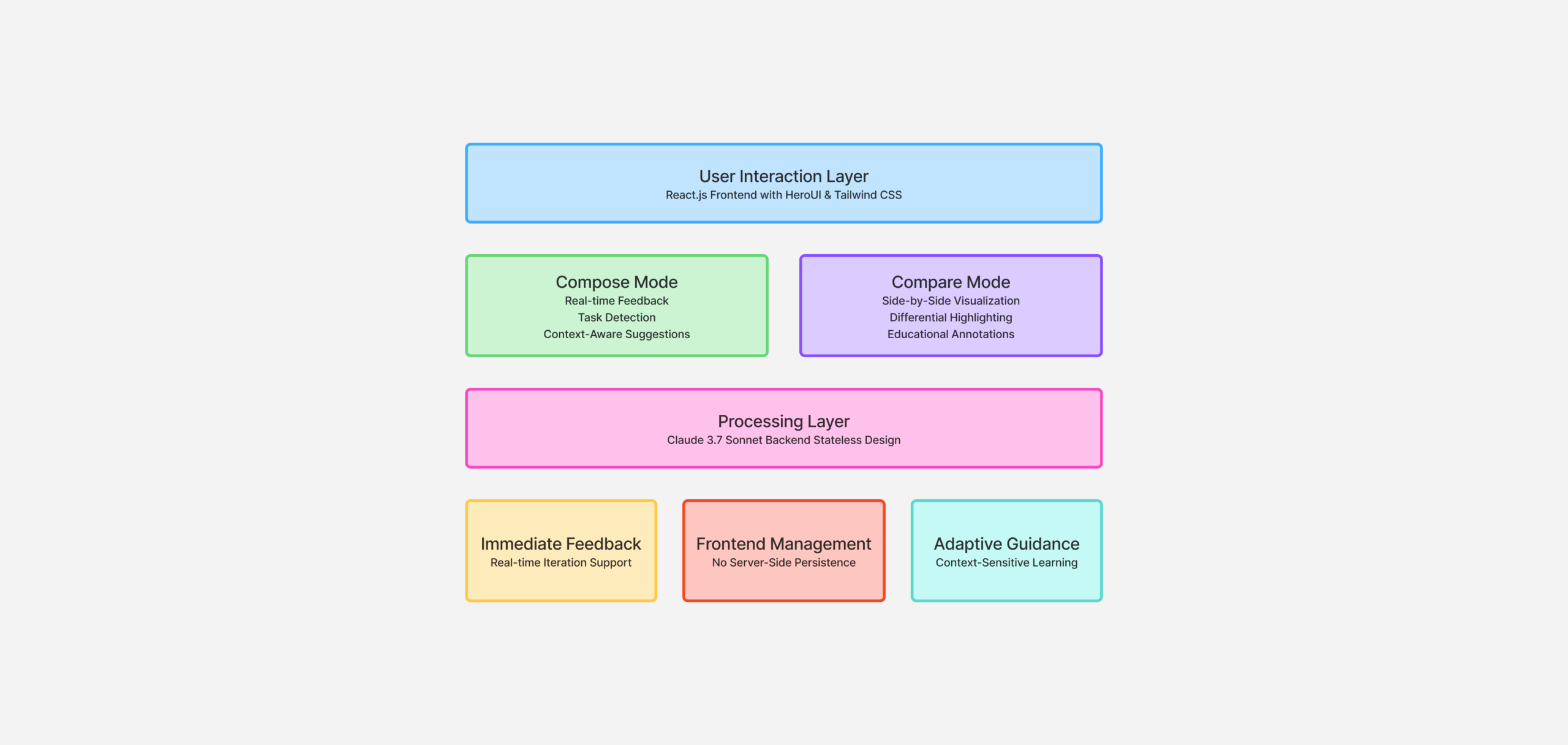

System Architecture

A React.js frontend built with HeroUI and Tailwind CSS, Claude 3.7 Sonnet as the language model backend, and a privacy-preserving stateless design.
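One way to read "stateless" is that every request to the model carries all the context it needs, so nothing about a user's prompts has to be stored between calls. The sketch below only builds a request body in the shape of Anthropic's Messages API (`model`, `max_tokens`, `system`, `messages`); the model identifier, system instruction, tag names, and `buildCompareRequest` helper are assumptions for illustration, and sending the request (for example with the official SDK) is left out.

```typescript
// Request body shape for Anthropic's Messages API.
interface MessagesRequest {
  model: string;
  max_tokens: number;
  system: string;
  messages: { role: "user" | "assistant"; content: string }[];
}

// Assumed model identifier for Claude 3.7 Sonnet; verify against the provider's model list.
const MODEL_ID = "claude-3-7-sonnet-20250219";

// Hypothetical system instruction for the comparison step.
const COMPARE_INSTRUCTIONS =
  "Compare the two prompt versions and explain which changes are likely to improve the response.";

// Pure function: it reads no stored session or history, so both prompt
// versions travel inside the request and nothing needs to persist server-side.
function buildCompareRequest(before: string, after: string): MessagesRequest {
  return {
    model: MODEL_ID,
    max_tokens: 1024,
    system: COMPARE_INSTRUCTIONS,
    messages: [
      {
        role: "user",
        content: `<version_a>\n${before}\n</version_a>\n<version_b>\n${after}\n</version_b>`,
      },
    ],
  };
}
```

Because the function depends only on its arguments, identical inputs always yield an identical request, which keeps the privacy story simple: the prompts exist only in the browser and in the single request that carries them.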

Dual-Mode Interface

Seamless transition between creation and evaluation contexts, task-specific adaptivity based on detected content, and visualization of cause-effect relationships.

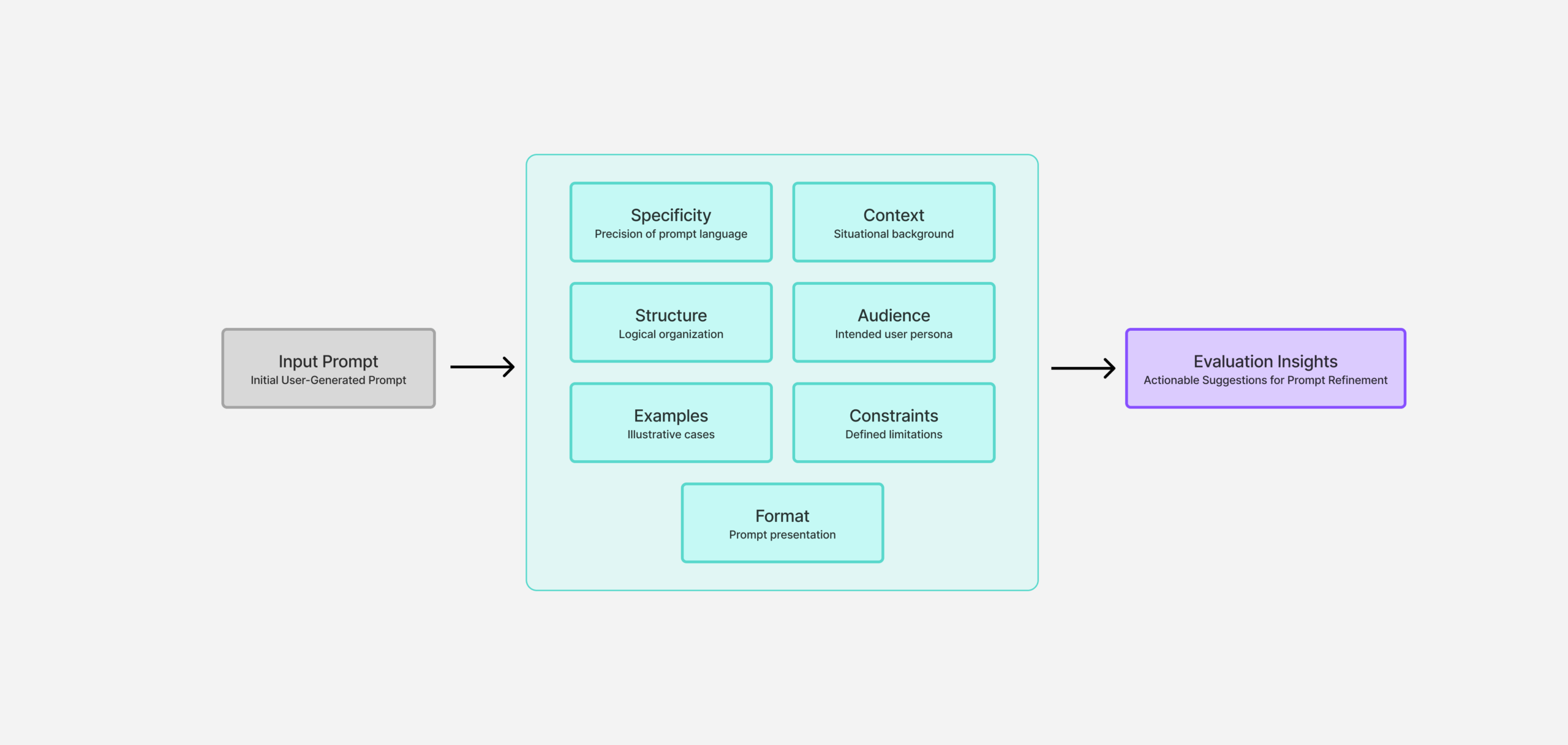

Prompt Analysis Engine

Seven-dimension evaluation (specificity, context, structure, audience, examples, constraints, and format), a comparative metrics system that quantifies improvements between versions, and visual representations of progress.
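The comparative metrics can be pictured as per-dimension differences between two scored versions of a prompt. This minimal sketch assumes each of the seven dimensions receives a numeric score (the scale and how scores are produced are not documented here, so both are assumptions), and the `Scores`, `Comparison`, and `compareScores` names are hypothetical.

```typescript
// The seven dimensions named in the Prompt Analysis Engine description.
const DIMENSIONS = [
  "specificity",
  "context",
  "structure",
  "audience",
  "examples",
  "constraints",
  "format",
] as const;
type Dimension = (typeof DIMENSIONS)[number];

// Assumed: one numeric score per dimension, produced elsewhere (e.g. by the model).
type Scores = Record<Dimension, number>;

interface Comparison {
  perDimension: Record<Dimension, number>; // after minus before, per dimension
  totalBefore: number;
  totalAfter: number;
  improved: Dimension[]; // dimensions whose score increased
}

// Sum the scores across all seven dimensions.
function total(s: Scores): number {
  return DIMENSIONS.reduce((sum, d) => sum + s[d], 0);
}

// Quantify the change between two versions of a prompt.
function compareScores(before: Scores, after: Scores): Comparison {
  const perDimension = {} as Record<Dimension, number>;
  for (const d of DIMENSIONS) perDimension[d] = after[d] - before[d];
  return {
    perDimension,
    totalBefore: total(before),
    totalAfter: total(after),
    improved: DIMENSIONS.filter((d) => perDimension[d] > 0),
  };
}
```

Keeping the per-dimension deltas, rather than only the totals, is what lets an interface point to which change (for instance, adding an example) drove the improvement.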

Evaluation

I evaluated Prompt Lab through 60-minute sessions with 10 professionals from diverse fields, combining interviews, guided tasks, and reflection discussions. Participants engaged more deeply with visual comparisons than with written guidance, and 8 of 10 spontaneously improved their prompt structure after visual comparison exercises.

The project addressed the prompt literacy gap through three mechanisms:

Accelerated Learning Through Visual Comparison

Side-by-side comparison emerged as the most powerful learning mechanism, enabling participants to quickly identify effective patterns and develop cause-effect understanding that abstract guidelines couldn't provide.

Transferable Evaluation Frameworks

Structured evaluation categories functioned as "mental checklists," with 9 of 10 participants describing specific ways they would apply these frameworks in their regular work, suggesting the development of generalizable mental models.

Workflow Integration and Professional Responsibility

Participants showed increased awareness of how their communication choices influence AI outputs: they spent about twice as long crafting later prompt iterations (an average of 92 seconds, up from 45) and expressed greater confidence in applying structured approaches across different contexts.

Key outcomes

Effective Learning Mechanism

Visual comparison accelerated learning more effectively than abstract guidelines, with 8 of 10 participants adopting improved structure after minimal exposure.

Transferable Mental Models

Structured frameworks provided participants with "mental checklists" applicable across diverse professional contexts.

Professional Responsibility

Visualizing prompt construction techniques fostered increased awareness of user agency in human-AI communication.

Design Principles

Established five evidence-based design principles for interfaces that effectively bridge the prompt literacy gap.

Design implications

Visual process representation is critical

Interfaces that explicitly visualize cause-effect relationships enable users to develop mental models of effective communication patterns more rapidly than abstract guidelines alone.

Layered information architecture enhances learning

Separating primary interaction from explanatory content allows users to maintain focus while accessing deeper insights when needed.

Interactive comparative evaluation builds understanding

Direct comparison of different prompt versions with clear metrics supports systematic understanding of which communication changes produce meaningful differences.

User-controlled transparency builds trust

Providing explanatory mechanisms that users can access on demand respects cognitive load while supporting deeper understanding at appropriate moments.

Context-adaptive guidance ensures relevance

Evaluation frameworks and learning materials that adjust to specific tasks ensure practical application across diverse workplace scenarios.


© 2025